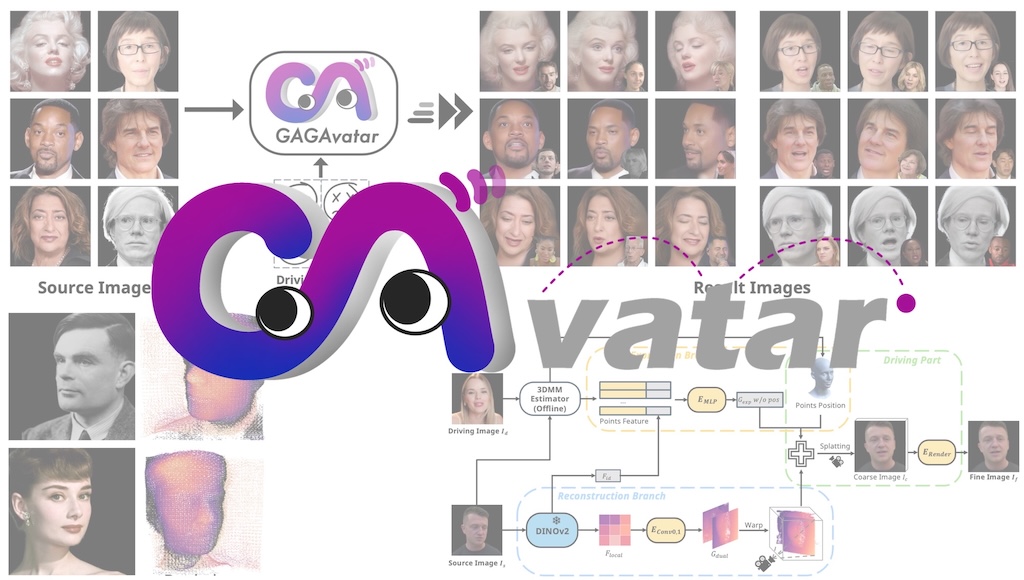

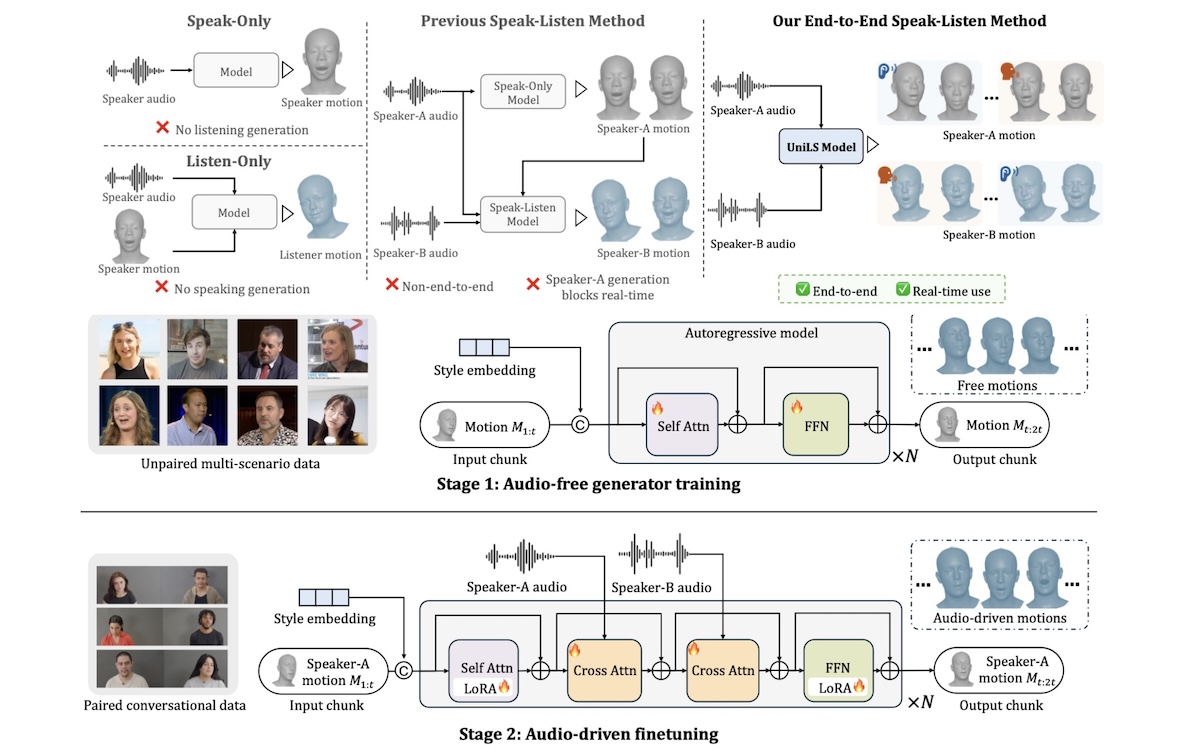

UniLS: End-to-End Audio-Driven Avatars for Unified Listening and Speaking

UniLS generates realistic, dual-track audio-driven expressions for both speakers and listeners. This work is part of the project "MIO: Towards Interactive Intelligence for Digital Humans".